It isn’t an exaggeration to say that ChatGPT has taken the world by storm. It took just five days for the OpenAI chatbot to reach one million users, which is incredibly fast. By comparison, it took Netflix three and a half years, Facebook ten months, Spotify five months, and Instagram two and a half months to reach the milestone that ChatGPT hit in less than a week. We have previously examined the ChatGPT hype and the tool itself, but OpenAI’s CEO Sam Altman recently took to Twitter with a warning about his company’s own product.

Altman said in his tweet:

“ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness.

it's a mistake to be relying on it for anything important right now. it’s a preview of progress; we have lots of work to do on robustness and truthfulness.”

Interestingly, Altman’s comments echo our own conclusion when we looked at the new tool over a week ago. We said:

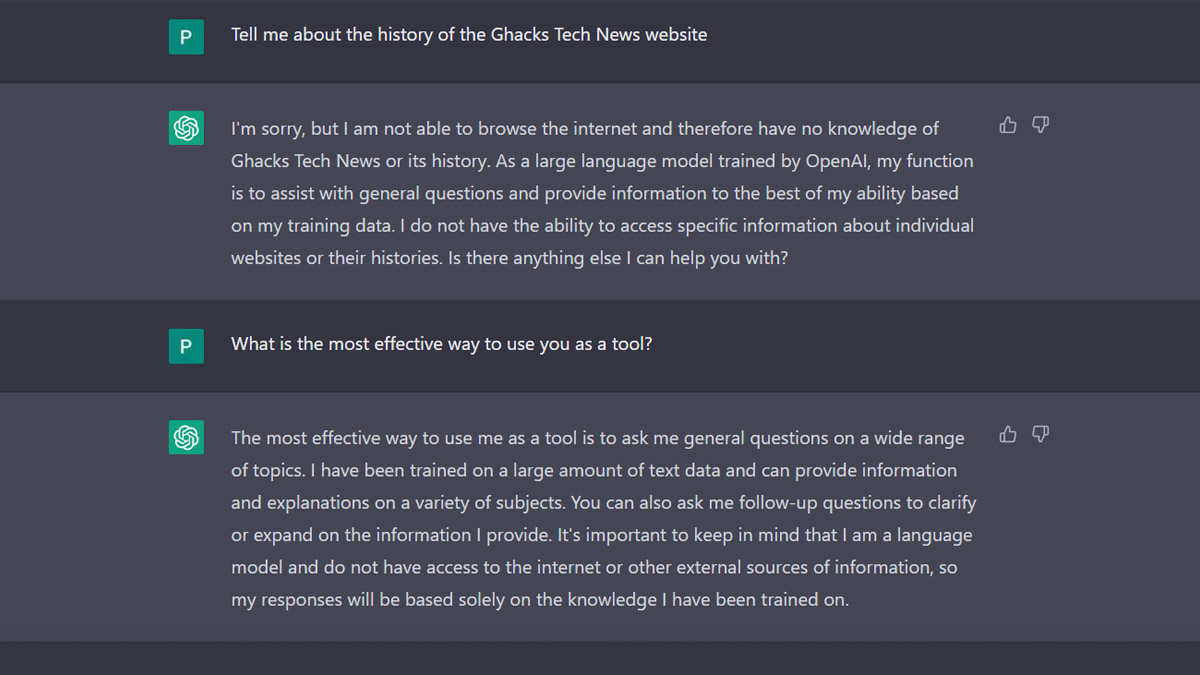

“The value of ChatGPT very much comes from how you are using it and what you are using it for. It is an incredibly useful and even entertaining tool in some instances, but you shouldn’t be leaning on it too heavily as it will likely creak and snap when put under any real pressure.”

Altman’s confirmation, then, only backs up what we had already said. The tool is fun to use and can offer inspiration, but it should not be relied upon for factual accuracy, and it is not a dependable ally when researching or creating outbound content. In short, the tool is a quick and handy assistant that can point you in the right direction, but you must always take those final steps yourself.

This is a common theme with the AI-based tools we are seeing today. Two other examples that spring to mind are Jasper and Lex, both of which offer AI copywriting services. Jasper comes with a pretty hefty subscription, while Lex is free and has also been optimized for use as a Google Docs alternative on mobile. Both let you enter commands and prompts much as you can on ChatGPT, and both then output content of varying length, generated automatically from your prompts. These are incredibly useful writing tools and can be good for quickly structuring articles and filling in sections and even paragraphs. However, the moment you send out one of these AI-created pieces of writing without checking it, whether you are publishing it online or sending it as an email, you are taking your credibility for granted.

That brings us to the important lesson to remember with these tools. They are not writers, and no matter the hype, ChatGPT is no more than a language model that leans on its training data. The key word to remember here is tools. They are new tools that offer us new ways of doing things, not something we can rely on to do our jobs for us. These tools offer a lot of promise for the future and utility in the present, but it is important to understand what they are so that you know how to use them properly.

The post The dangers of AI as told by OpenAI’s CEO appeared first on gHacks Technology News.